Introduction to Light and Energy

Mankind has always depended on the energy of sunlight, both directly for warmth, drying clothing, and cooking, and indirectly to provide food, water, and even air. Our awareness of the value of the sun's rays centers on the ways we benefit from that energy, but the relationship between light and energy has far more fundamental implications. Whether or not mankind devises ingenious mechanisms to harness the sun's energy, our planet and the changing environment it contains are naturally driven by the energy of sunlight.

We know that if the sun ever failed to rise, our weather would turn to the coldest winter within hours, lakes and rivers would freeze worldwide, and plants and animals would quickly begin to perish. Engines would fail to work, and we would have no way to transport food or fuel, or to generate electrical power. With limited fuel for fires, humans would soon have no source of light or heat. However, with our current knowledge of the solar system, we can be quite confident that the sun will rise tomorrow, as it has every day since the Earth first condensed from a gaseous cloud of space debris. In the not-too-distant past humans were not quite as confident. They could neither explain why the sun moved across the sky, nor how it produced the light that distinguished day from night. Many civilizations, recognizing the sun's importance, worshipped our closest star as a deity (see Figure 1) in hopes of preventing it from disappearing.

The amount of energy reaching the Earth from the sun is approximately 5.6 billion billion (quintillion) megajoules per year. Averaged over the entire surface of the planet, the portion that reaches the ground amounts to about 5 kilowatt-hours per square meter every day. The energy input from the sun in a single day could supply the needs of all of the Earth's inhabitants for about 3 decades. Obviously, there is no conceivable means (nor any need) to harness all of the available energy; equally obviously, capturing even a small fraction of it in a usable form would be of enormous value.
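The unit conversion behind these figures can be checked with a few lines of Python. This is a minimal sketch: the Earth's mean radius and the length of the year are assumed inputs that the text does not state. Spreading the full annual total evenly over the whole surface gives roughly 8 kilowatt-hours per square meter per day, somewhat more than the 5 kilowatt-hours quoted, which is consistent with a sizable share of incoming sunlight being reflected or absorbed by the atmosphere before it reaches the ground.

```python
import math

ANNUAL_ENERGY_MJ = 5.6e18      # figure quoted in the text: 5.6 quintillion MJ per year
EARTH_RADIUS_M = 6.371e6       # assumed mean radius of the Earth, in meters
DAYS_PER_YEAR = 365.25         # assumed
JOULES_PER_KWH = 3.6e6         # 1 kWh = 3.6 million joules

def daily_kwh_per_m2(annual_energy_mj: float) -> float:
    """Spread an annual energy total evenly over the Earth's entire surface."""
    surface_area_m2 = 4 * math.pi * EARTH_RADIUS_M ** 2
    joules_per_m2_per_year = annual_energy_mj * 1e6 / surface_area_m2
    return joules_per_m2_per_year / DAYS_PER_YEAR / JOULES_PER_KWH

print(f"{daily_kwh_per_m2(ANNUAL_ENERGY_MJ):.1f} kWh per square meter per day")
```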

Even though the total amount of energy reaching the Earth's atmosphere from the sun is staggering, it is diffuse compared to other energy sources that we use, such as fire, incandescent lamps, and electric stove burners. Therefore, any method of capturing solar energy must be applied over relatively large areas and, to be useful, requires effective means of concentrating the energy. Only in the last few decades has mankind begun to search in earnest for ways to harness the tremendous potential of solar energy. This concern has been driven by steadily increasing energy consumption, growing environmental problems from the fuels now consumed, and an ever-present awareness of the inevitable depletion of the fossil fuel resources on which we have become so heavily dependent.

Providing Energy for Life

The sun's energy is intimately associated with the existence of all living organisms presently on the planet, and with the manner in which early life forms developed on the primordial Earth and ultimately evolved into their present forms. Scientists now know that plants absorb water and carbon dioxide from the environment and, using energy from the sun (Figure 2), turn these simple substances into glucose and oxygen. With glucose as a basic building block, plants synthesize a number of complex carbon-based biochemicals used to grow and sustain life. This process is termed photosynthesis, and it is the cornerstone of life on Earth.
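In symbols, the overall reaction described here is usually summarized as 6CO₂ + 6H₂O → C₆H₁₂O₆ + 6O₂, with light supplying the energy. The short Python sketch below checks that this summary equation is balanced, that is, that every carbon, hydrogen, and oxygen atom entering as carbon dioxide and water leaves in glucose or oxygen. The formula parser is a hypothetical helper written for illustration and handles only simple formulas without parentheses.

```python
import re
from collections import Counter

def count_atoms(formula: str) -> Counter:
    """Count atoms in a simple formula such as "C6H12O6" (no parentheses)."""
    counts: Counter = Counter()
    for element, number in re.findall(r"([A-Z][a-z]?)(\d*)", formula):
        counts[element] += int(number) if number else 1
    return counts

def side_total(side) -> Counter:
    """Add up the atoms on one side of an equation given (coefficient, formula) pairs."""
    total: Counter = Counter()
    for coefficient, formula in side:
        for element, n in count_atoms(formula).items():
            total[element] += coefficient * n
    return total

# 6 CO2 + 6 H2O --(light)--> C6H12O6 + 6 O2
reactants = [(6, "CO2"), (6, "H2O")]
products = [(1, "C6H12O6"), (6, "O2")]

print(side_total(reactants) == side_total(products))  # True
```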

Scientists are still unraveling the complex mechanisms through which photosynthesis takes place, but the process has existed for billions of years and was a very early adaptation in the evolutionary history of life. The first living creatures were chemotrophs, which thrived by obtaining energy from simple chemical reactions. From these primitive organisms, cells evolved that were able to obtain the energy they needed through photosynthesis, producing oxygen as a byproduct. The simplest of these organisms were the cyanobacteria. Single-celled prokaryotes of this type are among the oldest living inhabitants of our planet, and they are believed to have been the dominant life form on Earth for more than 2 billion years. Geologists have found huge rock-like mats of fossilized cyanobacteria, termed stromatolites, that are over 3 billion years old (living stromatolites still form in shallow waters off coastal Australia).

Before photosynthetic organisms developed, there was very little oxygen in the Earth's atmosphere, but once the oxygen-producing process began, the potential existed for organisms to evolve that could make use of oxygen. Because of the tremendous amount of energy available from the sun, the ability to draw on that supply for the components necessary for life made possible far more complex life forms than had existed before the photosynthetic process evolved.

Most plant species grow in soil, and if removed or uprooted, they will perish. For centuries, humans believed that plants live by feeding on soil. In the early seventeenth century, the Belgian scientist Jan Baptista van Helmont carefully measured the weight of growing plants. Van Helmont demonstrated that a growing plant gained much more weight than the soil lost, and speculated that the plant had fed on something other than the soil. He ultimately concluded that plant growth was due, in part, to water. Over half a century later, English physiologist Stephen Hales discovered that plants also need air to grow and, to his surprise, found that plants absorb carbon dioxide from the air.

English chemist Joseph Priestley was the first investigator to find that healthy, growing plants release oxygen. His experiments documented the process of photosynthesis and indicated that respiration and photosynthesis are related processes that work in opposite directions. Priestley's most famous experiment (around 1772) demonstrated that a candle placed in a bell jar would quickly go out, but would burn again in the same air if a plant were left in the container for several days. He concluded that the plant had "restored" air that had been "injured" by the burning candle. In further experiments, Priestley showed that a mouse placed in the jar would "injure" the air in the same way as a candle, but could breathe the air again after it had been "restored", reinforcing the idea that respiration and photosynthesis are opposite processes. In Priestley's words, "the air would neither extinguish a candle, nor was it at all inconvenient to a mouse which I put into it". Priestley had discovered a substance that would later be named oxygen by the French chemist Antoine Laurent Lavoisier, who extensively investigated the relationship between combustion and air.

A key component of the understanding of photosynthesis was still missing until Dutch physiologist Jan Ingenhousz determined in 1778 that plants absorb carbon dioxide and release oxygen only when they are exposed to light. Finally, German physicist Julius Robert Mayer formalized the concept that growing plants transform energy from light into new chemicals. Mayer believed that a specialized chemical process (now known as oxidation) was the ultimate source of energy for a living organism.

Photosynthesis, meaning "putting together by light", is the process by which almost all plants, some bacteria, and a few protistans harness the energy in sunlight to produce sugar (with oxygen as a byproduct). The conversion of light energy into chemical energy depends on chlorophyll, a green pigment that gives plant leaves their green appearance. Not all plants have leaves, but those that do are very efficient at converting solar energy into chemical energy. As such, leaves can be thought of as biological solar collectors, equipped with numerous tiny cells that carry out photosynthesis on a microscopic level.

A pigment is any substance that absorbs visible light selectively, absorbing some wavelengths and reflecting others. Most pigments are coloring agents, displaying specific colors that depend on which wavelengths of light are reflected and which are absorbed. Each pigment has its own characteristic absorption spectrum, which determines the portion of the spectrum over which the pigment is effective at collecting energy from light. Chlorophyll, a biochemical common to all photosynthetic organisms, reflects green (intermediate) wavelengths and absorbs energy from the violet-blue and reddish-orange wavelengths at opposite ends of the visible light spectrum.

Chlorophyll is a complex molecule that exists in several forms in plants and other photosynthetic organisms. All organisms that carry out oxygen-producing photosynthesis contain the variety known as chlorophyll a. Many of these organisms also contain accessory pigments, including other chlorophylls, carotenoids, and xanthophylls, which absorb other wavelengths in the visible spectrum. In this manner, plants may be adapted to the specific environmental factors that affect the nature of the light available to them in their particular niche. Factors such as water depth and quality strongly influence the wavelengths of light available in different aquatic and marine environments, and play a large role in the photosynthetic function of different phytoplankton and other protistan species.

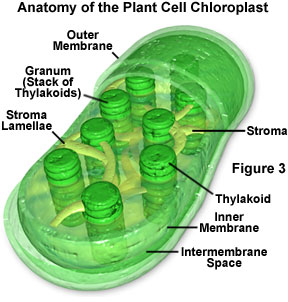

When a pigment absorbs light energy, the energy can be dissipated as heat, emitted at a longer wavelength as fluorescence, or used to trigger a chemical reaction. Certain membranes and structures in photosynthetic organisms serve as the structural units of photosynthesis, because chlorophyll participates in chemical reactions only when the molecule is associated with proteins embedded in a membrane (for example, the thylakoid membranes inside chloroplasts; Figure 3). Photosynthesis is a two-stage process, and in organisms that have chloroplasts, the two stages take place in different regions of these structures. A light-dependent process (often termed the light reactions) takes place in the thylakoid membranes, which are stacked into grana, while a second, light-independent process (the dark reactions) subsequently occurs in the stroma of the chloroplast (see Figure 3). It is believed that the dark reactions can take place in the absence of light as long as the energy carriers produced in the light reactions are present.

The first stage of photosynthesis occurs when the energy from light is used directly to produce energy carrier molecules, such as adenosine triphosphate (ATP). In this stage, water is split into its components, and oxygen is released as a byproduct. These energized carriers are subsequently used in the second and most fundamental stage of the photosynthetic process: the production of carbon-to-carbon covalent bonds. The second stage does not require illumination (a dark process) and is responsible for providing the basic nutrition for the plant cell, as well as building materials for cell walls and other components. In the process, carbon dioxide is fixed along with hydrogen to form carbohydrates, a family of biochemicals whose simplest members have the general formula Cn(H2O)n, containing hydrogen and oxygen in the same 2:1 ratio as water. Overall, photosynthesis does not allow living organisms to use light energy directly; instead, it involves energy capture in the first stage followed by a second stage of complex biochemical reactions that converts the energy into chemical bonds.

Photoelectric Phenomena

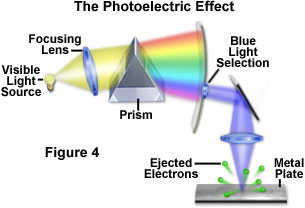

A fundamental question that arose among scientists as early as the 1700s concerned the effect that light has on matter, and the nature and implications of this interaction. By the nineteenth century, investigators had determined that light falling on the surface of certain metals could produce electrical charges. Later studies revealed that this phenomenon, now termed the photoelectric effect, frees electrons bound to the atoms in the metal (Figure 4). In 1900, the German physicist Philipp Lenard confirmed that the charge generation was due to electron emission, and found unexpected relationships between the wavelength of the light and the energy and number of electrons released. By using light of specific wavelengths (selected by a prism), Lenard demonstrated that the energy of the released electrons depends only on the wavelength of the light and not on its intensity. Low-intensity light produced fewer electrons, but each electron had the same amount of energy regardless of the light intensity. Furthermore, Lenard found that shorter wavelengths of light liberated electrons with more energy than those freed by longer wavelengths.

Lenard concluded that the intensity of light determines the number of electrons released by the photoelectric effect, and that the wavelength of the light determines the energy carried by each liberated electron. At the time, this unusual interaction between light and matter presented a dilemma that classical physics was unable to explain. The photoelectric effect was but one of several theoretical problems that physicists encountered around 1900 as a result of the widespread belief in the wave theory of light. It was left to another German physicist, Max Planck, to propose an alternative theory. Planck postulated that light and other forms of electromagnetic radiation are not continuous, but composed of discrete packets (quanta) of energy. His quantum theory, for which he received the Nobel Prize in Physics in 1918, explained how light could, in some situations, be thought of as particles that are equivalent to energy quanta, as the followers of Isaac Newton had also believed two hundred years earlier.

Albert Einstein relied on Planck's quantum principles to explain the photoelectric effect in a fundamental theory that would reconcile the continuous wave nature of light with its particle behavior. The logic behind Einstein's explanation is that light of a single wavelength behaves as if it consists of discrete particles, now known as photons, which all have the same energy. The photoelectric effect occurs because each displaced electron is the result of a collision between one photon from the light and one electron in the metal. Light of greater intensity results only in more photons impacting the metal per unit time, with correspondingly more electrons being ejected. The energy of each emitted electron depends upon the wavelength (frequency) of the light causing the emission, with higher frequencies producing electrons having more energy. The direct proportionality between photon energy and light frequency is described by Planck's fundamental postulate of quantum theory, which is the concept that links particle theory and wave theory, and which was later developed into the science of quantum mechanics.

Planck originally proposed the fundamental relationship between energy and frequency as part of his theory of how solids emit radiation when heated (blackbody radiation). The famous postulate states that the energy (E) of an incoming photon is equal to the light frequency (f) multiplied by a constant (h), now termed Planck's constant. The simple relationship is expressed as:

$$E = hf$$
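Planck's relation, E = hf, combined with f = c/λ (where c is the speed of light and λ the wavelength), makes Lenard's observations easy to reproduce numerically. The Python sketch below computes photon energies and, using Einstein's photoelectric equation K_max = hf - W, the maximum energy of an emitted electron. The work function W (the minimum energy needed to free an electron from the metal) is not discussed in the text, and the value of about 2.1 electron volts used for cesium is an approximate figure assumed for illustration.

```python
# Exact SI values of the constants.
PLANCK_H = 6.62607015e-34          # J*s
SPEED_OF_LIGHT = 2.99792458e8      # m/s
ELECTRON_CHARGE = 1.602176634e-19  # C (also the number of joules in 1 eV)

CESIUM_WORK_FUNCTION_EV = 2.1      # approximate value for cesium (assumption)

def photon_energy_ev(wavelength_nm: float) -> float:
    """Planck's relation E = h*f, with f = c / wavelength, in electron volts."""
    frequency_hz = SPEED_OF_LIGHT / (wavelength_nm * 1e-9)
    return PLANCK_H * frequency_hz / ELECTRON_CHARGE

def max_electron_energy_ev(wavelength_nm: float, work_function_ev: float) -> float:
    """Einstein's relation K_max = h*f - W; returns 0.0 at or below the threshold."""
    return max(0.0, photon_energy_ev(wavelength_nm) - work_function_ev)

def photons_per_second(power_w: float, wavelength_nm: float) -> float:
    """Number of photons per second carried by a beam of the given power."""
    return power_w / (photon_energy_ev(wavelength_nm) * ELECTRON_CHARGE)

for wavelength in (400, 550, 700):   # violet, green, red
    print(f"{wavelength} nm: photon {photon_energy_ev(wavelength):.2f} eV, "
          f"electron {max_electron_energy_ev(wavelength, CESIUM_WORK_FUNCTION_EV):.2f} eV")

# Doubling the power doubles the photon rate but not the energy per photon.
print(photons_per_second(2e-3, 550) / photons_per_second(1e-3, 550))
```

Shorter wavelengths give more energetic electrons, red light falls below the assumed cesium threshold entirely, and raising the beam power only increases the photon count, which mirrors the distinction between intensity and wavelength drawn above.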

The photoelectric effect is manifested in three different forms: photoemissive, photoconductive, and photovoltaic, with the latter being the most significant with respect to the conversion of light energy into electrical energy. The photoemissive effect occurs when light strikes a prepared metal surface, such as cesium, and transfers sufficient energy to eject electrons into free space adjacent to the surface. In a photoelectric cell, the emitted electrons are attracted by a positive electrode, and when a voltage is applied, a current is subsequently created that is linearly proportional to the intensity of the light incident on the cell. The photoemissive effect has been thoroughly described for higher energy ranges, such as the X-ray and gamma ray spectral regions, and cells of this type are commonly used to detect and study phenomena at these energy levels.

A variety of materials exhibit a marked change in conductivity when irradiated, and their photoconductive properties can be used to trigger electrical devices, as well as in other applications. In highly conductive substances, such as metals, the change in conductivity may be insignificant. In semiconductors, however, this change can be quite large. Because the increase in electrical conductivity is proportional to the light intensity striking the material, the current flow in an external circuit increases with light intensity. This type of cell is commonly employed in light sensors that perform tasks such as switching street lights and home lighting on and off.
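The light-sensor application can be illustrated with a small Python sketch. The controller below is hypothetical: it assumes the sensor circuit reports a current (in microamps) that rises with light intensity, and the two threshold values are invented for illustration. Using separate switch-on and switch-off thresholds (hysteresis) is a common design choice that keeps the lamp from flickering when the light level hovers near a single threshold at dusk or dawn.

```python
from dataclasses import dataclass

@dataclass
class StreetLightController:
    """Hypothetical dusk-to-dawn switch driven by a photoconductive sensor.

    The sensor current rises with light intensity, so a low reading means dark.
    Separate on/off thresholds (hysteresis) prevent flicker at twilight.
    """
    on_below_ua: float = 20.0    # assumed: turn the lamp on below this current
    off_above_ua: float = 40.0   # assumed: turn the lamp off above this current
    lamp_on: bool = False

    def update(self, sensor_current_ua: float) -> bool:
        """Feed one sensor reading (microamps) and return the new lamp state."""
        if not self.lamp_on and sensor_current_ua < self.on_below_ua:
            self.lamp_on = True
        elif self.lamp_on and sensor_current_ua > self.off_above_ua:
            self.lamp_on = False
        return self.lamp_on

controller = StreetLightController()
readings = [120, 60, 35, 18, 25, 35, 45, 90]   # evening dims, then morning brightens
print([controller.update(r) for r in readings])
# [False, False, False, True, True, True, False, False]
```

With these example readings, the lamp switches on when the current drops below 20 microamps and stays on until the current rises above 40 microamps.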

The Photovoltaic Effect and Solar Cells

Solar cells convert light energy into electrical energy, either indirectly by first converting it into heat, or through a direct process known as the photovoltaic effect. The most common types of solar cells are based on the photovoltaic effect, which occurs when light falling on a two-layer semiconductor material produces a potential difference, or voltage, between the two layers. The voltage produced in the cell is capable of driving a current through an external electrical circuit that can be utilized to power electrical devices.

In 1839, French physicist Edmond Becquerel discovered that light shining on two identical electrodes immersed in a weakly conducting solution will produce a voltage. This effect was not very efficient at producing electricity, and because there were no practical applications at the time, it remained merely a curiosity for many years. Several decades later, Willoughby Smith discovered the photoconductivity of selenium while testing materials for underwater telegraph cables. A description of the first selenium photocell was published in 1877, and the observation of the photovoltaic effect in a solid generated a great deal of interest. An American inventor, Charles Fritts, created the first solar cells made from selenium wafers in 1883, although his cells had a conversion efficiency of only about 1 to 2 percent. Practical commercial or industrial applications were not readily apparent, and by the beginning of the twentieth century (following the invention of the electric light bulb), generation of electricity by turbines had become widespread. Interest in the photovoltaic effect quickly waned, and most research in the field became focused on the control and applications of electricity.

A comprehensive understanding of the phenomena involved in the photovoltaic effect would not arrive until the quantum theory was developed. Early photovoltaic applications were primarily in sensing or measuring light, such as in photographic light meters, rather than in producing electrical energy. The necessary stimulus for research in this arena came from Einstein's description of the photoelectric effect and the early experiments using crude photoelectric cells. The first practical solar cells arose from the discovery of photoelectric properties in doped silicon semiconductors. Solar modules produced by Bell Laboratories in 1954 were made from doped silicon of this kind and operated at efficiencies near 6 percent. By 1960, photovoltaic cells had progressed to efficiency ratings approaching 14 percent, a level high enough to produce useful devices.

Today, the most common photovoltaic cells employ several layers of doped silicon, the same semiconductor material used to make computer chips. Their function depends upon the movement of charge carriers between successive silicon layers. In pure silicon, when sufficient energy is added (for example, by heating), some electrons in the silicon atoms can break free from their bonds in the crystal, leaving behind a hole in an atom's electronic structure. These freed electrons move about randomly through the solid until they meet another hole with which to combine and release their excess energy. Functioning as free carriers, the electrons are capable of producing an electrical current, although in pure silicon there are so few of them that current levels would be insignificant. However, silicon can be modified by adding specific impurities that increase either the number of free electrons (n-silicon) or the number of holes, that is, missing electrons (p-silicon). Because both holes and electrons are mobile within the fixed silicon crystal lattice, they can combine to neutralize each other under the influence of an electrical potential. Silicon that has been doped in this manner has sufficient photosensitivity to be useful in photovoltaic applications.

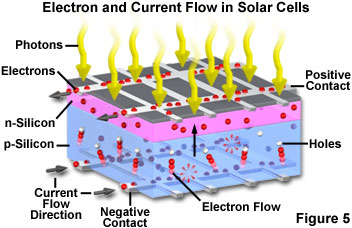

In a typical photovoltaic cell, two layers of doped silicon semiconductor are tightly bonded together (see Figure 5). One layer is modified to have excess free electrons (termed an n-layer), while the other layer is treated to have an excess of electron holes or vacancies (a p-layer). When the two dissimilar semiconductor layers are joined at a common boundary (termed a p-n junction), the free electrons in the n-layer cross into the p-layer in an attempt to fill the electron holes. The combining of electrons and holes at the p-n junction creates a barrier that makes it increasingly difficult for additional electrons to cross. As the electrical imbalance reaches an equilibrium condition, a fixed electric field results across the boundary separating the two sides.
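The strength of this equilibrium barrier is often expressed as the built-in potential of the junction. The Python sketch below uses the standard textbook expression for an abrupt p-n junction, V_bi = (kT/q) ln(N_A N_D / n_i^2), which the text itself does not give. The doping densities N_A and N_D are assumed example values, and the intrinsic carrier density of silicon, n_i, is an approximate room-temperature figure (published values range from about 1.0 × 10^10 to 1.5 × 10^10 per cubic centimeter).

```python
import math

BOLTZMANN_K = 1.380649e-23          # J/K
ELECTRON_CHARGE = 1.602176634e-19   # C
TEMPERATURE_K = 300.0               # assumed room temperature
SILICON_NI_CM3 = 1.0e10             # approximate intrinsic carrier density of Si at 300 K

def built_in_potential_v(acceptors_cm3: float, donors_cm3: float) -> float:
    """Abrupt-junction estimate: V_bi = (kT/q) * ln(N_A * N_D / n_i**2), at 300 K."""
    thermal_voltage = BOLTZMANN_K * TEMPERATURE_K / ELECTRON_CHARGE
    return thermal_voltage * math.log(acceptors_cm3 * donors_cm3 / SILICON_NI_CM3 ** 2)

# Assumed doping: p-layer 1e16 acceptors/cm^3, n-layer 1e18 donors/cm^3.
print(f"{built_in_potential_v(1e16, 1e18):.2f} V")
```

The result is a fraction of a volt; heavier doping on either side raises the barrier, but only logarithmically.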

When light of an appropriate wavelength (and energy) strikes the layered cell and is absorbed, electrons are freed to travel randomly. Electrons close to the boundary (the p-n junction) can be swept across the junction by the fixed field. Because the electrons can easily cross the boundary, but cannot return in the other direction (against the field gradient), a charge imbalance results between the two semiconductor regions. Electrons being swept into the n-layer by the localized effects of the fixed field have a natural tendency to leave the layer in order to correct the charge imbalance. Towards this end, the electrons will follow another path if one is available. By providing an external circuit by which the electrons can return to the other layer, a current flow is produced that will continue as long as light strikes the solar cell. In the construction of a photovoltaic cell, metal contact layers are applied to the outer faces of the two semiconductor layers, and provide a path to the external circuit that connects the two layers. The final result is production of electrical power derived directly from the energy of light.
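How much power such a cell delivers to the external circuit depends on the load. A common way to model this, not described in the text, is the ideal single-diode equation I = I_L - I_0 (exp(V/V_T) - 1), where I_L is the current generated by light, I_0 is the diode's saturation current, and V_T (about 0.0259 volt near room temperature) is the thermal voltage. The Python sketch below sweeps the voltage from zero to open circuit and picks the point of maximum power; the parameter values are assumptions chosen only to give a realistic order of magnitude for one silicon cell.

```python
import math

THERMAL_VOLTAGE = 0.02585  # kT/q near 300 K, in volts

def cell_current(voltage: float, light_current: float, saturation_current: float) -> float:
    """Ideal single-diode model: I = I_L - I_0 * (exp(V / V_T) - 1)."""
    return light_current - saturation_current * (math.exp(voltage / THERMAL_VOLTAGE) - 1.0)

def max_power_point(light_current: float, saturation_current: float, steps: int = 2000):
    """Sweep V from 0 to open circuit; return (Voc, (V, I, P) at peak power)."""
    open_circuit_v = THERMAL_VOLTAGE * math.log(light_current / saturation_current + 1.0)
    best = (0.0, light_current, 0.0)
    for k in range(steps + 1):
        v = open_circuit_v * k / steps
        i = cell_current(v, light_current, saturation_current)
        if v * i > best[2]:
            best = (v, i, v * i)
    return open_circuit_v, best

# Assumed parameters for one illuminated cell (illustrative only).
voc, (v_mp, i_mp, p_mp) = max_power_point(light_current=3.0, saturation_current=1e-9)
print(f"Voc = {voc:.3f} V, peak power {p_mp:.2f} W at {v_mp:.3f} V")
```

With these assumed values the open-circuit voltage comes out a little above half a volt, and the peak power occurs somewhat below that voltage, so a single cell supplies well under 2 watts.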

The voltage produced by solar cells varies with the wavelength of incident light, but typical cells are designed to use the broad wavelength spectrum of daylight provided by the sun. The amount of energy produced by the cell is wavelength-dependent, with longer wavelengths generating less electricity than shorter wavelengths. Because a single silicon cell produces only about half a volt, less than a typical flashlight battery, hundreds or even thousands must be coupled together to produce enough electricity for demanding applications. A number of solar-powered automobiles have been built and successfully operated at highway speeds through the use of a large number of solar cells. In 1981, an aircraft known as the Solar Challenger, which was covered with 16,000 solar cells producing over 3,000 watts of power, was flown across the English Channel powered solely by sunlight. Feats such as these inspire interest in expanding the uses of solar power. However, the use of solar cells is still in its infancy, and these energy sources are still largely restricted to powering low-demand devices.
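Sizing an array comes down to simple arithmetic: cells wired in series add their voltages, and series strings wired in parallel add their currents. The Python sketch below assumes round-number values of 0.5 volt and 1 watt per cell (both assumptions, not figures from the text) and also divides the Solar Challenger's quoted output by its cell count.

```python
import math

def array_layout(target_voltage: float, target_power_w: float,
                 cell_voltage: float = 0.5, cell_power_w: float = 1.0):
    """Return (cells in series per string, parallel strings, total cells).

    Series cells add voltage; parallel strings add current (and so power).
    The per-cell voltage and power defaults are assumed round numbers.
    """
    series = math.ceil(target_voltage / cell_voltage)
    string_power_w = series * cell_power_w
    strings = math.ceil(target_power_w / string_power_w)
    return series, strings, series * strings

print(array_layout(target_voltage=48.0, target_power_w=3000.0))  # (96, 32, 3072)

# Figures from the text: 16,000 cells producing just over 3,000 W.
print(f"{3000 / 16000:.2f} W per cell on the Solar Challenger")
```

For a 48-volt, 3,000-watt target this gives 96 cells per string and 32 strings, 3,072 cells in all; the Solar Challenger figures work out to roughly 0.19 watt per cell.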

Current photovoltaic cells employing the latest advances in doped silicon semiconductors convert an average of 18 percent (reaching a maximum of about 25 percent) of the incident light energy into electricity, compared to about 6 percent for cells produced in the 1950s. In addition to improvements in efficiency, new methods are also being devised to produce cells that are less expensive than those made from single crystal silicon. Such improvements include silicon films grown on much less expensive polycrystalline silicon wafers. Amorphous silicon has also been tried with some success, as has the evaporation of thin silicon films onto glass substrates. Materials other than silicon, such as gallium arsenide, cadmium telluride, and copper indium diselenide, are being investigated for their potential benefits in solar cell applications. Recently, titanium dioxide thin films have been developed for potential photovoltaic cell construction. These transparent films are particularly interesting because they can also serve double duty as windows.

Passive and Active Solar Energy

Although solar cells convert light directly into electrical energy, indirect means can also utilize light to produce energy in the form of heat. These mechanisms can be divided into two generalized classes: passive and active solar energy systems. Passive systems depend upon absorption of heat without associated mechanical motion. As an example, the solar oven is nothing more than an insulated box with a glass cover and black interior, which can reach temperatures exceeding 100 degrees Celsius in strong, direct sunlight. These temperatures can be utilized to cook food, and in developing countries or areas with limited fuel resources, such a simple tool can provide a significant enhancement to the quality of life.

Active solar energy systems typically use sunlight to heat a fluid and then channel the heated fluid to another area where it is needed. Small-scale hot water systems already provide most of the bathing and washing water needs in some areas of the world. These simple devices consist of black-painted water pipes sandwiched between glass plates and insulated to retain as much heat as possible. Large-scale active systems use arrays of mirrors to focus light onto a central collector, which can be, for example, a boiler that produces steam to power turbines. Solar farms employing hundreds or thousands of parabolic mirrors can produce enough steam from water pumped through the collector to generate tens of megawatts of power during daylight hours.
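The heat delivered by a small water-heating system can be estimated from Q = mcΔT, where m is the mass of water, c its specific heat, and ΔT the temperature rise. In the Python sketch below, the collector area, daily sunlight, collector efficiency, and tank size are all assumed example values, and heat losses from the tank are ignored.

```python
SPECIFIC_HEAT_WATER = 4186.0   # J/(kg*K)
JOULES_PER_KWH = 3.6e6

def temperature_rise_c(collector_area_m2: float, daily_kwh_per_m2: float,
                       efficiency: float, tank_liters: float) -> float:
    """Temperature rise from Q = m * c * dT, taking 1 liter of water as 1 kg.

    Heat losses from the tank and piping are ignored.
    """
    heat_j = collector_area_m2 * daily_kwh_per_m2 * efficiency * JOULES_PER_KWH
    return heat_j / (tank_liters * SPECIFIC_HEAT_WATER)

# Assumed example: 2 m^2 collector, 5 kWh/m^2 of sunlight, 50% efficient, 150 L tank.
print(f"{temperature_rise_c(2.0, 5.0, 0.5, 150.0):.1f} degrees Celsius")
```

Under these assumptions, a 150-liter tank warms by roughly 29 degrees Celsius over the day.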

Solar Conversion to Combustible Fuels - Hydrogen

Although solar energy exists in an inexhaustible supply that is available at no cost (and is non-polluting), the conversion of light energy from the sun is associated with numerous problems that severely limit efficient applications. The most desirable situation would be to devise a mechanism to convert solar energy into a compact and portable form that could easily be transported to distant locations. Many research endeavors are aimed at using concentrated solar energy to achieve the high temperatures necessary to drive various chemical reactions, often using chemical catalysts to produce different combinations of gaseous fuels that can be easily stored and transported. Some of the possibilities are promising, but most experts in the field of energy conversion agree that the ultimate fuel to be derived from solar energy conversion is hydrogen.

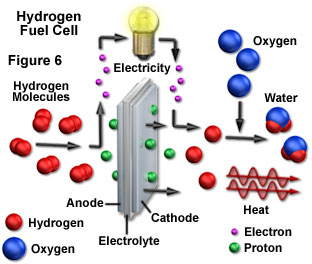

The appeal of hydrogen as a fuel is overwhelming. Molecular hydrogen is made from the lightest element in the universe, and can easily be stored and transported. Furthermore, hydrogen can be derived from water with molecular oxygen as the only byproduct. When hydrogen is burned, it combines with oxygen in the air to form water once again, thereby regenerating the source material. Most importantly, none of the steps in the entire cycle that releases energy in a usable form produces significant quantities of pollutants. As long as the sun continues to produce its light energy, the hydrogen supply is inexhaustible. Currently, hydrogen is used primarily as a rocket fuel, in catalytic fuel cells (as illustrated in Figure 6), and as a component of a number of industrial chemical processes. However, with readily achieved modifications, the smallest element could fulfill all of mankind's electricity and transportation requirements.

Although hydrogen can be produced directly from water, some form of energy input is required to carry out the separation from oxygen. One means of driving the reaction is to use an electric current in a process termed electrolysis, and sunlight can be used to generate the electricity for the conversion. Electrolysis involves an oxidation-reduction reaction in which current passed through an electrode pair immersed in water produces hydrogen and oxygen gases at the opposite electrodes. Another possible route for hydrogen generation is to concentrate sunlight at high enough temperatures to cause the thermal decomposition of water into its oxygen and hydrogen components, which can then be separated.
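The amount of hydrogen that a given electrolysis current can produce follows from Faraday's law of electrolysis: each hydrogen molecule requires two electrons, so the moles of H₂ equal the charge passed divided by twice the Faraday constant. The Python sketch below assumes a steady current (for example, one supplied by a solar panel) and an idealized efficiency of 100 percent unless a lower value is given; the overall reaction is 2H₂O → 2H₂ + O₂.

```python
FARADAY_C_PER_MOL = 96485.33212   # Faraday constant
MOLAR_MASS_H2_G = 2.016           # grams per mole of H2

def hydrogen_from_electrolysis(current_a: float, hours: float,
                               faradaic_efficiency: float = 1.0):
    """Return (moles, grams) of H2 produced by a steady electrolysis current.

    Two electrons are needed per H2 molecule, e.g. 2 H+ + 2 e- -> H2 at the cathode.
    An efficiency of 1.0 assumes every electron ends up in hydrogen (idealized).
    """
    charge_c = current_a * hours * 3600.0
    moles_h2 = faradaic_efficiency * charge_c / (2.0 * FARADAY_C_PER_MOL)
    return moles_h2, moles_h2 * MOLAR_MASS_H2_G

moles, grams = hydrogen_from_electrolysis(current_a=10.0, hours=1.0)
print(f"{moles:.3f} mol of H2 ({grams:.2f} g); oxygen forms at half that molar rate")
```

A steady 10-ampere current for one hour yields just under 0.19 mole of hydrogen, a little less than 0.4 gram.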

Ultimately, a more sophisticated means of splitting water to generate molecular hydrogen would be desirable. One technique by which the separation might be achieved is to harness the sun's energy through chemical reactions in a manner similar to the photosynthesis carried out by plants and bacteria. When exposed to sunlight, green chlorophyll-containing plants continually split water molecules, releasing oxygen and combining hydrogen with carbon dioxide to form sugars. If the first part of this process, or a similar one, can be duplicated, a limitless supply of hydrogen would be available, driven by solar energy input.

A significant effort is focused on the development of artificial photosynthesis, which, on a fundamental level, can be described as photo-induced charge separation at molecularly defined interfaces. One goal of this research is the development of light-controlled enzymes and even molecular-scale electronics, which involve the transfer of charge carriers in response to light and chemical activity. Another objective is the biotechnological production of substances such as enzymes and pigments. In recent years, bacteria and similar organisms that degrade oil have been used to clean up spills. Currently, scientists are attempting to perfect ways of using organisms that live and grow on solar energy for a variety of bioremediation purposes, such as cleaning up contaminated water supplies.

Under certain conditions, algae can be induced to switch off their normal photosynthetic sequence at a particular stage and produce hydrogen instead. By preventing the cells from burning stored fuel in the usual manner, researchers force the algae to activate an alternative metabolic pathway that yields significant amounts of hydrogen. This discovery raises hopes that hydrogen fuel can someday be produced from sunlight and water through photosynthesis in large-scale photobioreactor complexes. Recent investigations have also uncovered marine bacteria containing the light-absorbing pigment proteorhodopsin, which enables them to convert sunlight into cellular energy without relying on chlorophyll. This finding raises the possibility of using easily manipulated bacteria, such as E. coli, in light-driven energy generators with numerous applications in both the physical and life sciences.

Photoelectric Imaging Applications: Conversion of Light into Electrical Signals

One of the most common applications of the photoelectric effect is in devices used to detect photons that carry image information in cameras, microscopes, telescopes and other imaging devices. With the growth of digital imaging technologies, rapid progress has occurred in the technology employed to convert light into meaningful electrical signals. Several types of light detectors are in common use. Some collect signals that have image information with no spatial discrimination, while others are area detectors that more directly capture images with spatial and intensity information combined. Light detectors that are based on the photoelectric effect include photomultiplier tubes, avalanche photodiodes, charge-coupled devices, image intensifiers, and complementary metal-oxide semiconductor (CMOS) photo sensors. Of these, the charge-coupled device is suited for the widest range of imaging and detection tasks, and is therefore the most commonly employed. The principles that underlie its operation are also fundamental to the function of the other types of detectors.

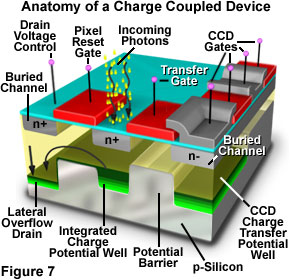

The charge-coupled device (CCD) is a silicon-based integrated circuit consisting of a dense matrix, or array, of photodiodes that convert light energy, in the form of photons, into electronic charge. Each photodiode in the array operates on a principle similar to that of the photovoltaic cell. In the CCD, however, electrons generated by the interaction of photons with silicon atoms are stored in a potential well and can later be transferred across the chip through registers and output to an amplifier. The schematic diagram presented in Figure 7 illustrates the various components that make up a typical CCD.

CCDs were invented in the late 1960s by research scientists at Bell Laboratories, who initially conceived the idea as a new type of memory circuit for computers. Later studies indicated that the device, because of its ability to transfer charge and its electrical response to light, would also be useful for other applications such as signal processing and imaging. Early hopes for a new memory device have all but disappeared, but the CCD has emerged as one of the leading candidates for an all-purpose electronic imaging detector. It is capable of replacing film in the emerging field of digital imaging, both for general use and in specialized fields such as digital photomicrography.

Fabricated on silicon wafers much like other integrated circuits, CCDs are processed in a series of complex photolithographic steps that involve etching, ion implantation, thin film deposition, metallization, and passivation to define various functions within the device. The silicon substrate is electrically doped to form p-type silicon, a material in which the main carriers are positively charged electron holes. When an ultraviolet, visible, or infrared photon strikes a silicon atom resting in or near a CCD photodiode, it will usually produce a free electron and a "hole" created by the temporary absence of the electron in the silicon crystalline lattice. The free electron is then collected in a potential well (located deep within the silicon in an area known as the depletion layer), while the hole is forced away from the well and eventually is displaced into the silicon substrate. Individual photodiodes are isolated electrically from their neighbors by a channel stop, which is formed by diffusing boron ions through a mask into the p-type silicon substrate.
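The requirement that a photon carry enough energy to free an electron can be checked with a short calculation. The Python sketch below compares photon energy (E = hc/λ) with the band gap of silicon. The room-temperature band gap of about 1.12 eV and the helper names are assumptions for illustration. The model ignores absorption depth, surface coatings, and the other factors that shape a real CCD's spectral response.

```python
# Sketch: can a photon of a given wavelength free an electron in silicon?
# Assumption: a room-temperature silicon band gap of about 1.12 eV. Absorption depth,
# coatings, and device structure, which shape a real CCD's response, are ignored.

PLANCK_EV_NM = 1239.84       # h*c expressed in eV*nm (approximate)
SILICON_BAND_GAP_EV = 1.12   # assumed room-temperature band gap of silicon


def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy of one photon, E = hc / wavelength, in electron volts."""
    if wavelength_nm <= 0:
        raise ValueError("wavelength must be positive")
    return PLANCK_EV_NM / wavelength_nm


def can_generate_pair(wavelength_nm: float,
                      band_gap_ev: float = SILICON_BAND_GAP_EV) -> bool:
    """True if a single photon has at least the band-gap energy needed to
    create an electron-hole pair."""
    return photon_energy_ev(wavelength_nm) >= band_gap_ev


for wl in (400, 550, 700, 1000, 1200):  # violet through near infrared
    print(f"{wl} nm: {photon_energy_ev(wl):.2f} eV, "
          f"electron-hole pair possible: {can_generate_pair(wl)}")
```

Under these assumptions the long-wavelength cutoff falls near 1,100 nm (1239.84 / 1.12). This is why silicon detectors respond into the near infrared but not much beyond it.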


The principal architectural feature of the CCD is a vast array of serial shift registers. These are constructed with a vertically stacked conductive layer of doped polysilicon, separated from the silicon semiconductor substrate by an insulating thin film of silicon dioxide. During operation, a voltage applied to the polysilicon electrode layers (termed gates) changes the electrostatic potential of the underlying silicon. The silicon substrate directly beneath a gate electrode then becomes a potential well capable of collecting electrons generated locally by the incident light. Neighboring gates help confine electrons within the well by forming surrounding zones of higher potential energy for electrons, termed barriers. By modulating the voltage applied to them, the polysilicon gates can be biased to form either a potential well or a barrier to the integrated charge collected by the photodiode.
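How switching gates between "well" and "barrier" moves charge can be illustrated with a toy model of three-phase clocking, which is one common CCD clocking scheme. In this hypothetical sketch, each pixel spans three gates. Only one phase is biased high (forming wells) at any time, and the other two act as barriers. Real clock waveforms overlap so that charge briefly spreads under two gates during a transfer, and that detail is omitted here. The names `clock_step` and `shift_one_pixel` are illustrative only.

```python
from typing import List, Tuple

PHASES = 3  # gates per pixel in this hypothetical three-phase layout


def clock_step(gates: List[int], high_phase: int) -> Tuple[List[int], int, int]:
    """Advance the clock one step.

    Charge under gates of `high_phase` (the current wells) moves onto the next
    gate, whose phase becomes the new high phase while the old phase drops low
    and becomes a barrier. Charge pushed past the last gate leaves the column
    (for example, into a serial register) and is returned as `spilled`.
    """
    next_phase = (high_phase + 1) % PHASES
    new_gates = [0] * len(gates)
    spilled = 0
    for i, charge in enumerate(gates):
        if charge == 0:
            continue
        if i % PHASES != high_phase:
            raise ValueError(f"charge found under barrier gate {i}")
        if i + 1 < len(gates):
            new_gates[i + 1] += charge
        else:
            spilled += charge
    return new_gates, next_phase, spilled


def shift_one_pixel(gates: List[int]) -> Tuple[List[int], int]:
    """Run one full clock cycle (three steps), moving every packet by one pixel."""
    phase = 0  # assume packets start under phase-0 gates
    total_out = 0
    for _ in range(PHASES):
        gates, phase, out = clock_step(gates, phase)
        total_out += out
    return gates, total_out


# Three pixels (nine gates) holding packets of 5, 7, and 9 electrons under phase 0.
column = [5, 0, 0, 7, 0, 0, 9, 0, 0]
shifted, read = shift_one_pixel(column)
print(shifted, read)  # [0, 0, 0, 5, 0, 0, 7, 0, 0] 9
```

Each full clock cycle moves every packet forward by exactly one pixel without mixing neighboring packets. This property is what allows an image to be read out intact.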

After being illuminated by incoming photons during a period termed integration, the potential wells in the CCD photodiode array become filled with electrons produced in the depletion layer of the silicon substrate. The charge stored in each well must then be read out in a systematic manner. This stored charge is measured through a combination of serial and parallel transfers that move the accumulated charge to a single output node at the edge of the chip, where it connects to an output amplifier. Parallel charge transfer is usually fast enough to be completed during the integration period for the next image.
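The filling of the wells during integration can be summarized as a simple product of photon arrival rate, quantum efficiency, and integration time. A real potential well can hold only a limited number of electrons (its full-well capacity), so the sketch below clips the result at that limit. The quantum efficiency of 0.5, the 40,000-electron full well, and the function names are hypothetical values chosen for illustration, and noise is ignored.

```python
from typing import List


def integrated_electrons(photon_rate: float, quantum_efficiency: float,
                         integration_s: float, full_well: float) -> float:
    """Expected electrons collected in one potential well.

    photon_rate: photons per second reaching this photodiode
    quantum_efficiency: fraction of photons that yield a collected electron (0..1)
    integration_s: integration (exposure) time in seconds
    full_well: maximum number of electrons the well can hold (saturation)
    """
    if not 0.0 <= quantum_efficiency <= 1.0:
        raise ValueError("quantum efficiency must lie between 0 and 1")
    if photon_rate < 0 or integration_s < 0:
        raise ValueError("photon rate and integration time must be non-negative")
    expected = photon_rate * quantum_efficiency * integration_s
    return min(expected, float(full_well))  # a full well cannot hold more charge


def integrate_frame(photon_rates: List[List[float]], quantum_efficiency: float,
                    integration_s: float, full_well: float) -> List[List[float]]:
    """Apply the same integration to every photodiode in a 2-D array."""
    return [[integrated_electrons(r, quantum_efficiency, integration_s, full_well)
             for r in row] for row in photon_rates]


# Hypothetical parameters: QE of 0.5, 10 s integration, 40,000-electron full well.
frame = integrate_frame([[1000.0, 2000.0], [10000.0, 0.0]], 0.5, 10.0, 40_000)
print(frame)  # [[5000.0, 10000.0], [40000.0, 0.0]]
```

The brightest pixel in this example would collect 50,000 electrons if the well were unlimited, but it saturates at the assumed full-well capacity.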

Following collection in the potential wells, electrons are shifted in parallel, one row at a time, by a signal generated from the vertical shift register clock. The vertical shift register clock operates in cycles to change the voltages on alternate electrodes of the vertical gates in order to move the accumulated charge across the CCD. After traversing the array of parallel shift register gates, the charge eventually reaches a specialized row of gates known as the serial shift register. Here, the packets of electrons representing each pixel are shifted horizontally in sequence, under the control of a horizontal shift register clock, toward an output amplifier and off the chip. A CCD typically has only one read-out amplifier positioned at the corner of the entire photodiode array. In the output amplifier, electron packets register the amount of charge produced by successive photodiodes from left to right in a single row starting with the first row and proceeding to the last. This produces an analog raster scan of the photo-generated charge from the entire two-dimensional array of photodiode sensor elements.
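The order in which charge packets reach the single output amplifier can be modeled with two queues. One queue stands for the parallel register (rows of pixels) and the other for the serial register (one row at a time). This is a sketch under stated assumptions: row 0 is taken to be the row adjacent to the serial register, and the amplifier sits at the column-0 end of that register. The function name `read_out` is hypothetical.

```python
from collections import deque
from typing import List


def read_out(frame: List[List[int]]) -> List[int]:
    """Return pixel charges in the order they reach the output amplifier."""
    # Copy the frame so the caller's data is not modified.
    parallel_register = deque(deque(row) for row in frame)
    output: List[int] = []
    while parallel_register:
        # Parallel transfer: all rows step toward the serial register, and the
        # row nearest it drops into the serial register.
        serial_register = parallel_register.popleft()
        # Serial transfer: packets shift one at a time toward the amplifier.
        while serial_register:
            output.append(serial_register.popleft())
    return output


print(read_out([[1, 2, 3], [4, 5, 6]]))  # [1, 2, 3, 4, 5, 6]
```

The flat output list corresponds to the analog raster scan described above. Each value is one pixel's charge, taken row by row, which the camera electronics then reassemble into a two-dimensional image.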

Conversion of Energy Into Light

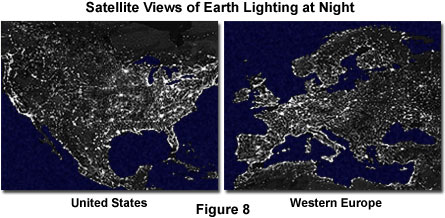

Because of the magnitude and significance of the solar light and energy reaching the Earth, the reverse conversion of other forms of energy into light seems almost trivial by comparison. However, recent photographs of the Earth at night, taken from spacecraft and satellites, reveal that humans in heavily populated areas are producing a considerable amount of light by converting electrical energy (Figure 8). Other natural processes also generate light, often accompanied by heat. Whether it occurs naturally or with human assistance and ingenuity, light can be generated through the conversion of mechanical, chemical, or electrical energy. Figure 8 is a composite of hundreds of photographs of the Earth taken by satellites. The light from manmade sources clearly delineates major population centers in North America and Western Europe.

At some point in the distant past, humans learned how to use fire in beneficial ways. Lightning-generated fires probably provided the first controllable light sources, and these natural fires would have been maintained as an asset for as long as possible. If the flames went out, a new fire source would have to be found by hunting and collecting. The earliest success at intentionally making fire most likely came from producing heat and embers through the friction of rubbing sticks together, or from "striking" sparks by knocking certain rocks or minerals together. Either method would ignite some easily combustible material placed nearby. The Romans are known to have used burning tar-coated torches as a portable light source over 2000 years ago. Fire was not only useful but also had great symbolic significance in many early cultures and in their mythology. In a practice that draws on ancient Greek tradition, the Olympic Games are still launched today with the ceremonial "bringing of fire" from Greece to the site of the event.

Mankind has burned some form of fuel with oxygen from the air to provide light (as well as heat) for thousands of years, following an inevitable path of progress as improvements in both function and safety were sought. After it was learned that animal fats and plant oils burned with a bright yellow light, these oils came into great demand, and much of the supply was derived from sea animals such as whales and seals. Burning oil is difficult to control, so wicks were added to lamps to regulate the rate of burning and prevent dangerous flare-ups. Oil lamps are known to have been used for over 10,000 years. Candles are an adaptation of the oil lamp that provides fuel in a more convenient, solid form. The earliest candles used tallow or beeswax, while modern candles are made primarily from paraffin derived from petroleum. Further development in the use of flame to provide light occurred during the nineteenth century, when gaslights came into wide use in cities and towns.

Matches, which are used to ignite other combustible substances, rely on a chemical reaction to produce flame. Match heads are usually coated with phosphorus compounds that ignite in the presence of oxygen when heated by friction, through rubbing or striking on an abrasive surface. So-called safety matches must be lit by rubbing (striking) them on a special surface and will not ignite through inadvertent contact with other surfaces. Chemical compounds in the match head and the striking surface combine to create an initial spark, which starts a chemical reaction leading to combustion of the match.

The conversion of electrical energy into light became practical in the 1800s with the development of the arc lamp. These lamps work by causing an electrical current to jump across a gap between two carbon rods, producing a sustained, bright arc of light. Arc lamps produced much brighter light than candles or gaslights, but they required constant maintenance and were a fire hazard. In 1879, both Joseph Swan in Britain and Thomas Edison in the United States demonstrated electric lamps that used current-heated carbon filaments sealed in partially evacuated glass envelopes. The glass "bulbs" of these lamps were pumped to a partial vacuum and contained very little oxygen. As a result, the filaments would not catch fire but would become very hot and glow brightly.

Modern electric lamps use three different processes to produce light from the electrical energy supplied to them. Standard incandescent lamps are derived directly from the early models of the 1800s. They now commonly use a tungsten filament in an inert gas atmosphere and produce light through resistive heating, in which the filament becomes hot when an electrical current passes through it. The fluorescent lamp is a more energy-efficient type in which light is emitted by a phosphor coating on the inner surface of a glass tube. The phosphor is stimulated to fluoresce by ultraviolet radiation, which is emitted when current flows through a gas in the tube. The third type of lamp in common use is the vapor lamp, which contains vapors such as mercury or sodium that emit visible light when an electrical current flows through them. These lamps can be of the high- or low-pressure variety, and the spectral characteristics of their light depend upon the gas and other substances incorporated into the lamp.
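One reason a hot filament is an inefficient light source can be estimated with Wien's displacement law, which gives the peak emission wavelength of an ideal blackbody. A tungsten filament is only approximately a blackbody. The filament temperature of about 2,800 K used below is an assumed, representative value, and the approximate 5,800 K solar surface temperature is included only for comparison.

```python
WIEN_B_M_K = 2.897771955e-3  # Wien's displacement constant, in metre-kelvin


def peak_wavelength_nm(temperature_k: float) -> float:
    """Wavelength of peak blackbody emission (Wien's displacement law), in nm."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive")
    return WIEN_B_M_K / temperature_k * 1e9


VISIBLE_NM = (380.0, 750.0)  # approximate limits of human vision

for label, t in [("incandescent filament (assumed)", 2800.0),
                 ("solar surface (approx.)", 5800.0)]:
    peak = peak_wavelength_nm(t)
    visible = VISIBLE_NM[0] <= peak <= VISIBLE_NM[1]
    print(f"{label}: {t:.0f} K -> peak {peak:.0f} nm, in visible band: {visible}")
```

Under these assumptions the filament's emission peaks near 1,000 nm, in the infrared. Much of the electrical energy supplied to the lamp therefore leaves as heat rather than visible light.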

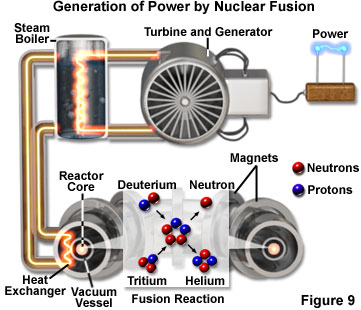

Perhaps the most fundamental process that converts energy into light is the one that serves as the sun's source of heat and light: nuclear fusion. Scientists have been able to produce fusion reactions for only about half a century, but such reactions have been going on continuously in the universe for billions of years. Fusion is a process in which two lighter atomic nuclei collide and combine to form a single heavier nucleus (see Figure 9). The resulting nucleus has less mass than the combined masses of the two fusing nuclei. The lost mass is converted into energy in accordance with Einstein's mass/energy equivalence equation, E = mc². Fusion reactions are the source of the energy output of most stars, including our sun. Thus, the warmth and light of the sun, which form the basis for all life on Earth, are products of nuclear fusion.
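The mass-to-energy conversion can be made concrete with a short calculation. The sketch below uses the net outcome of the sun's hydrogen-burning chains: four hydrogen atoms become one helium-4 atom. It uses neutral-atom masses in unified atomic mass units, which is the usual convention for accounting for the electrons involved. The rounded mass values and the function names are assumptions for illustration. In the sun, a small fraction of this energy escapes as neutrinos rather than as heat and light.

```python
U_TO_MEV = 931.494  # energy equivalent of one atomic mass unit, in MeV (approx.)

# Neutral-atom masses in unified atomic mass units (rounded standard values).
ATOMIC_MASS_U = {
    "H-1": 1.007825032,
    "He-4": 4.002603254,
}


def total_mass_u(nuclides):
    """Sum of the atomic masses of a list of nuclide names."""
    return sum(ATOMIC_MASS_U[n] for n in nuclides)


def released_energy_mev(reactants, products):
    """Energy released (Q value) from the mass lost when reactants become products."""
    return (total_mass_u(reactants) - total_mass_u(products)) * U_TO_MEV


def mass_fraction_converted(reactants, products):
    """Fraction of the starting mass that disappears and appears as energy."""
    before = total_mass_u(reactants)
    return (before - total_mass_u(products)) / before


# Net result of hydrogen burning in the sun: four hydrogen -> one helium-4.
reactants = ["H-1"] * 4
products = ["He-4"]
print(f"{released_energy_mev(reactants, products):.2f} MeV")  # about 26.7 MeV
print(f"{mass_fraction_converted(reactants, products):.2%} of the starting mass")
```

Only about 0.7 percent of the hydrogen's mass is converted into energy. Because c² is so large, however, that small fraction accounts for the sun's enormous output.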

When it first forms, a star contains hydrogen and helium that may have been created at the origin of the universe. Hydrogen nuclei continue to collide, forming helium nuclei, which in turn collide to form heavier nuclei, and so on, in a chain of nuclear synthesis reactions. The fusion of different hydrogen isotopes into a helium isotope produces over a million times more energy than a typical chemical reaction. This basic reaction that drives the sun will continue until the hydrogen supply is nearly exhausted. The sun will then evolve into a red giant star, growing large enough to engulf the inner planets and possibly the Earth.
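The "over a million times" comparison can be checked with a rough calculation. The sketch below takes deuterium-tritium fusion (D + T → He-4 + n) as the example of hydrogen isotopes fusing into a helium isotope. It compares that reaction with burning hydrogen in oxygen, taking an assumed combustion enthalpy of about 285.8 kJ/mol of water formed. The choice of these two reactions, the rounded atomic masses, and the function names are assumptions for illustration.

```python
U_TO_MEV = 931.494              # MeV per atomic mass unit (approx.)
AVOGADRO = 6.02214076e23        # particles per mole
JOULE_PER_EV = 1.602176634e-19  # joules in one electron volt

MASS_U = {  # atomic masses, unified atomic mass units (rounded)
    "H-2": 2.014101778,   # deuterium
    "H-3": 3.016049281,   # tritium
    "He-4": 4.002603254,
    "n": 1.008664916,     # free neutron
}


def dt_fusion_energy_ev() -> float:
    """Energy released by one D + T -> He-4 + n reaction, in eV."""
    mass_lost = (MASS_U["H-2"] + MASS_U["H-3"]) - (MASS_U["He-4"] + MASS_U["n"])
    return mass_lost * U_TO_MEV * 1e6


def chemical_energy_ev(kj_per_mol: float) -> float:
    """Convert a molar reaction enthalpy (kJ/mol) to energy per reaction event, in eV."""
    return kj_per_mol * 1e3 / AVOGADRO / JOULE_PER_EV


fusion = dt_fusion_energy_ev()           # roughly 17.6 million eV per reaction
combustion = chemical_energy_ev(285.8)   # H2 + 1/2 O2 -> H2O, assumed enthalpy
print(f"fusion: {fusion / 1e6:.1f} MeV, combustion: {combustion:.2f} eV")
print(f"ratio: about {fusion / combustion:.1e}")
```

With these values, one fusion event releases several million times more energy than one molecule of hydrogen burning in air. This is consistent with the comparison in the text.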

Man's first experiments with nuclear fusion led to the development of the hydrogen bomb. Research under way today aims at a more beneficial application: using controlled fusion reactions to generate clean, inexpensive power. Calculations of the rate at which the sun is consuming its original hydrogen supply indicate that we may have only about 5 billion more years of reliable energy from this source in which to perfect our own version of fusion. Hopefully, that will be long enough.

Contributing Authors

Kenneth R. Spring - Scientific Consultant, Lusby, Maryland, 20657.

Thomas J. Fellers and Michael W. Davidson - National High Magnetic Field Laboratory, 1800 East Paul Dirac Dr., The Florida State University, Tallahassee, Florida, 32310.